1. The difference between the theatrical and the theoretical mime. — In the one the performance is palpable, but removed from pragmatic use, so that the mime is widely reviled out of habit, even when his actions beckon a half-hearted attention. Some wish to beat the mime to a pulp. More uncivilized spectators, containing their feral thoughts within the imagination, ruminate over whether or not the mime’s hypothetical gush of blood will be as invisible as the box that he is “trapping” himself in. One sees the mime’s principles within his performance, but the mime represents both theater and theory in practice. This causes hostility. This causes ulcers. This causes many to complain to their spouses and, in the most extreme cases, to undergo a temporary shift in slumbering receptacle from bed to couch.

2. But in the theoretical mime, the principles are fully separate from the theatrical. The mime neither exists nor is permitted to exist. It maintains its imaginative perch within an active noggin and proves so stubborn a resident that hostility is meted out to the theatrical mime, who shares nothing more than this subjective projection and is thereby innocent. The spectator only has to look at a real mime to be reminded of these theoretical speculations, and no real effort is necessary; for the theatrical mime’s performance is far from subtle and mimes themselves are numerous within our society.

3. All mimes would then be theoretical if they had access to the spectator’s theoretical viewpoint, or if they could indeed speak. But mimes are only permitted to convey their thoughts and feelings through silent action. And the mime rules dictate that props and gait must be invented. Since the mime is so occupied with these inherent duties, the communication between the mime and the spectator who contains the theoretical projection is one-way. A mime is a terrible thing to waste, both in its theoretical and theatrical forms.

4. The reason, therefore, that the spectator remains so hostile to the theoretical mime is that he is not dressed up in a striped shirt and his face is not attired in white paint. If the spectator is asphyxiated by a necktie as he watches the mime and his mind is occupied by negative thoughts pertaining to his work, then the spectator is likely to project additional theoretical mimes upon the theatrical mime.

5. But dull mimes are never either theatrical or theoretical.

6. The mime, if he is lively, is drawing from his own inner theoretical mime, shifting his arms and legs and chest by subconscious instinct. He therefore contains more of the theatrical mime than the theoretical mime as he carries out his performance. But it is just the reverse with the spectator. And where the spectator feels hostility towards the mime, the mime, by way of inhabiting more of the theatrical mime, feels ebullience, which he then applies to the performance.

7. Just as we harm the mime by projecting our theoretical mime upon him, so too does the theatrical mime harm the spectator in failing to project the theoretical upon us. That the theoretical forms the emotional bridge between mime and spectator, rather than the theatrical, is the chief cause of the many negative feelings directed towards the mime.

8. There are many people who witness a mime in the same way as they crave Ian Fleming’s Vespers.

9. There remains the possibility of rectifying the theoretical/theatrical balance, but this will involve a good deal of mime outreach to beleaguered sectors of humanity. And since outreach is associated with many of the regrettable sensitivity and self-help movements of the 1970s, and since mimes themselves have already garnered a hostile position within civilization, the only practical way to destroy this dichotomy is for the mimes to become spectators and the spectators to become mimes. The difficulties with establishing a World Mime Day come with the necessary autocratic enforcement. For in order for mimes to be understood as theatrical beings, it will be necessary for 90% of the spectators to become mimes. This is a difficult ratio, one that will certainly cause numerous spectators to resist and one that will cause further anti-mime propaganda to be disseminated through various circulars, several social networks, and numerous snarky websites.

10. But let us momentarily adopt an optimistic position and assume that such a possibility becomes plausible. Many of the new mimes (formerly spectators) will have difficulties adjusting to the role, and may come to resent the theatrical mime further, retreating again to the theoretical. Some may indeed decide that their roles as spectators have been balderdash all along and may become permanent mimes. But would such born-again mimes be finding the right role in relation to society? It might be sufficiently argued that being a mime for a day is much better than toiling in a maquiladora. Then again, if being a mime is largely voluntary and without compensation, one might also argue the reverse.

11. Eloquence. — It requires the theatrical and the theoretical, but the theatrical must itself be drawn from the true. Eloquence is a bit like a high school blood drive, but the stakes are higher and the ambitions are tantamount to climbing Everest.

12. Eloquent responses to the mime problem therefore require one entire year, whereby the shift from spectator to mime is staggered over a 365-day period, so that the many impromptu mimes scattered into everyday society are not so shocking. Governments must institute special tax incentives, encouraging spectators to become mimes and letting the natural eloquence of the theatrical noodle its way into the theoretical. We must believe that mimes are more than two conditions. In this way, the spectators might overcome their internal skepticism by momentarily embracing the obverse.

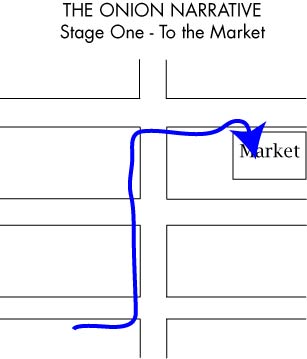

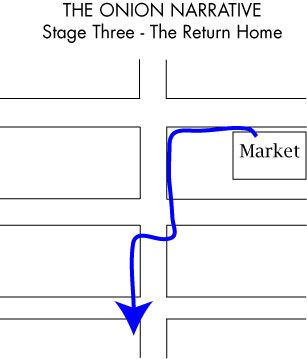

Stage Three of my journey seems less significant than the first two stages. By my own judgment, it is also the least interesting part of the video recreation. The onion has been obtained. I recall that during the original narrative, I found myself observing more people. I was not in a rush to get back. But on the video, I am circling around people rather than approaching them. I do not know if this is because of the camera or because I felt uneasy reproducing the narrative. And why should Stage Three be the least of the three? The primary goal has been obtained. The mind is at ease and can be more spontaneous with the rigid order out of the way. Or so one would think.

Stage Three of my journey seems less significant than the first two stages. By my own judgment, it is also the least interesting part of the video recreation. The onion has been obtained. I recall that during the original narrative, I found myself observing more people. I was not in a rush to get back. But on the video, I am circling around people rather than approaching them. I do not know if this is because of the camera or because I felt uneasy reproducing the narrative. And why should Stage Three be the least of the three? The primary goal has been obtained. The mind is at ease and can be more spontaneous with the rigid order out of the way. Or so one would think.