(This is the fourteenth entry in The Modern Library Nonfiction Challenge, an ambitious project to read and write about the Modern Library Nonfiction books from #100 to #1. There is also The Modern Library Reading Challenge, a fiction-based counterpart to this list. Previous entry: Six Easy Pieces.)

Clocking in at a mere ninety pages in very large type, G.H. Hardy’s A Mathematician’s Apology is that rare canapé plucked from a small salver between all the other three-course meals and marathon banquets in the Modern Library series. It is a book so modest that you could probably read it in its entirety while waiting for the latest Windows 10 update to install. And what a bleak and despondent volume it turned out to be! I read the book twice and, each time I finished it, I wanted to seek out some chalk-scrawling mathematician and offer a hug.

G.H. Hardy was a robust mathematician just over the age of sixty who had made some serious contributions to number theory and population genetics. He was a cricket-loving man who had brought the Indian autodidact Srinivasa Ramanujan to academic prominence by personally vouching for and mentoring him. You would think that a highly accomplished dude who went about the world with such bountiful and generous energies would be able to ride out his eccentric enthusiasm into his autumn years. But in 1939, Hardy survived a heart attack and felt that he was as useless as an ashtray on a motorcycle, possessing nothing much in the way of nimble acumen or originality. So he decided to memorialize his depressing thoughts about “useful” contributions to knowledge in A Mathematician’s Apology (in one of the book’s most stupendous understatements, Hardy observed that “my apology is bound to be to some extent egotistical”), and asked whether mathematics, the field that he had entered into because he “wanted to beat other boys, and this seemed to be the way in which I could do so most decisively,” was worthwhile.

You can probably guess how it all turned out:

It is indeed rather astonishing how little practical value scientific knowledge has for ordinary men, how dull and commonplace such of it as has value is, and how its value seems almost to vary inversely to its reputed utility….We live either by rule of thumb or other people’s professional knowledge.

If only Hardy could have lived about sixty more years to discover the 21st-century thinker’s parasitic relationship to Google and Wikipedia! The question is whether Hardy is right to be this cynical. While snidely observing “It is quite true that most people can do nothing well,” he isn’t a total sourpuss. He writes, “A man’s first duty, a young man’s at any rate, is to be ambitious,” and points out that ambition has been “the driving force behind nearly all the best work of the world.” What he fails to see, however, is that youthful ambition, whether in a writer or a scientist, often morphs into a set of routines that become second nature. At a certain point, a person becomes comfortable enough with himself to simply go on with his work, quietly evolving, and the ambition becomes more covert, subconscious, and mysterious.

Hardy never quite confronts what it is about youth that frightens him, but he is driven by a need to justify his work and his existence, and he offers two reasons why people do what they do: (1) they work at something because they know they can do it well, and (2) they work at something because a particular vocation or specialty came their way. But this dichotomy seems too pat and Gladwellian to be persuasive. It doesn’t really account for the journey we all must take in figuring out why we do something, a journey that generally includes the vital people you meet at certain points in your life who steer you in certain directions. Either they recognize some talent in you and give you a leg up, or they are smart and generous enough to recognize that one essential part of human duty is to help others find their way: to seek out their people (ideally a group with eclectic and vastly differing perspectives) and to work with each other to do the best damn work and live the best damn lives they can. Because what’s the point of geeking out about Fermat’s “two squares” theorem, which really is, as Hardy observes, a nifty result of pure mathematical beauty, if you can’t share it with others?

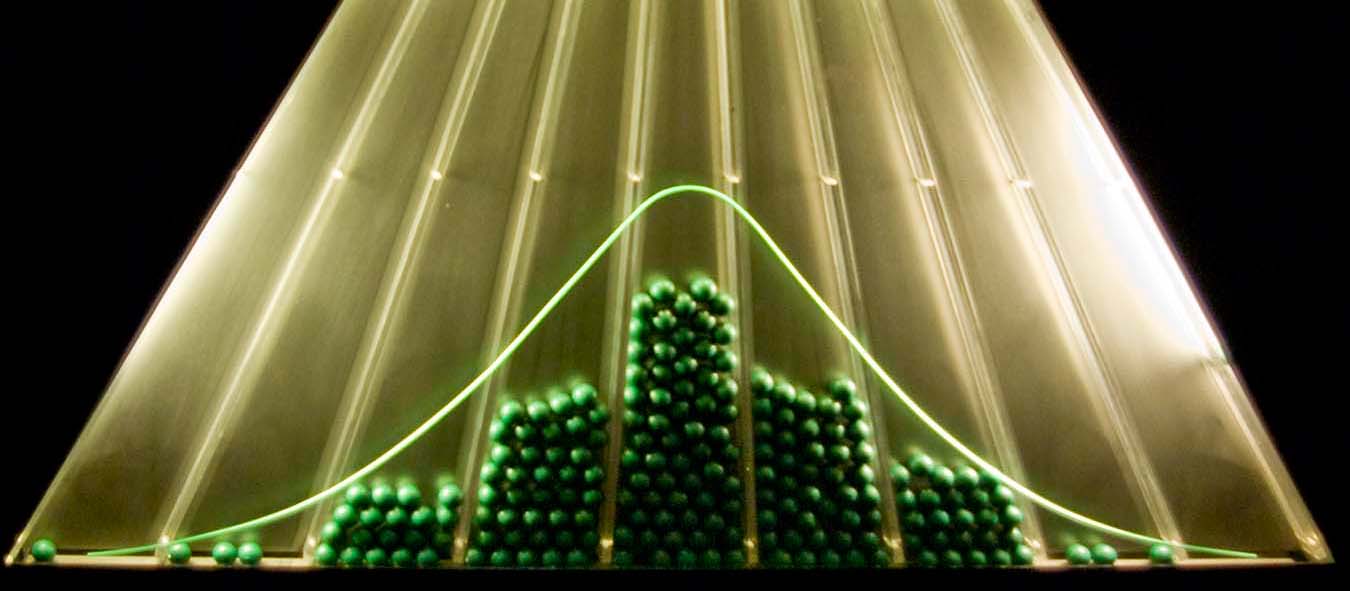

But let’s return to Hardy’s fixation on youth. Hardy makes the claim that “mathematics, more than any other art or science, is a young man’s game,” yet this staggering statement is easily debunked by such late bloomers as prime number ninja Zhang Yitang and Andrew Wiles, who solved Fermat’s Last Theorem at the age of 41. Even in Hardy’s own time, Henri Poincaré was making innovations in topology and the Lorentz transformations well into middle age. (And Hardy explicitly references Poincaré in § 26 of his Apology. So it’s not like he didn’t know!) Perhaps some of the more recent late-life contributions have much to do with forty now being the new thirty (or even the new twenty among a certain Jaguar-buying, midlife-crisis type) and with many men in Hardy’s time believing themselves to be superannuated in body and soul around the age of thirty-five, but it does point to the likelihood that Hardy’s sentiments were less the result of serious thinking and more the result of crippling depression.

Where Richard Feynman saw chess as a happy metaphor for the universe, “a great game played by the gods” in which we humans are mere observers who “do not know what the rules of the game are,” merely allowed to watch the playing (and yet find marvel in this all the same), Hardy believed that any chess problem was “simply an exercise in pure mathematics…and everyone who calls a problem ‘beautiful’ is applauding mathematical beauty, even if it is a beauty of a comparatively lowly kind.” Hardy was so sour that he compared a chess problem to a newspaper puzzle, claiming that it merely offered an “intellectual kick” for the clueless educated rabble. As someone who enjoys solving the Sunday New York Times crossword in full and playing a good chess game (it’s the street players I have learned the most from, for they often have the boldest and most original moves), I can’t really argue against Hardy’s claim that such pastimes are “trivial” or “unimportant” in the grand scheme of things. But Hardy seems unable to remember the possibly apocryphal tale of Archimedes discovering the principle of displacement while in the bathtub or the more reliable story of Otto Loewi’s dream leading the great Nobel-winning physiologist to discover that nerve impulses are transmitted chemically. Great minds often need to be restfully thinking or active on other fronts in order to come up with significant innovations. And while Hardy may claim that “no chess problem has ever affected the development of scientific thought,” I feel compelled to note that Pythagoras played the lyre (and even inspired a form of tuning), Newton had his meandering apple moment, and Einstein enjoyed hiking and sailing. These were undoubtedly “trivial” practices by Hardy’s austere standards, but would these great men have given us their contributions if they hadn’t had such downtime?

It’s a bit gobsmacking that Hardy never mentions how Loewi was fired up by his dreams. He seems only to see value in Morpheus’s prophecies if they are dark and melancholic:

I can remember Bertrand Russell telling me of a horrible dream. He was in the top floor of the University Library, about A.D. 2100. A library assistant was going round the shelves carrying an enormous bucket, taking down book after book, glancing at them, restoring them to the shelves or dumping them into the bucket. At last he came to three large volumes which Russell could recognize as the last surviving copy of Principia mathematica. He took down one of the volumes, turned over a few pages, seemed puzzled for a moment by the curious symbolism, closed the volume, balanced it in his hand and hesitated….

One of an author’s worst nightmares is to have his work rendered obsolete not long after his death, even though there is a very strong likelihood that, in about 150 years, few people will care about the majority of books published today. (Hell, few people care about anything I have to write today, much less this insane Modern Library project. There is a high probability that I will be dead in five decades and that nobody will read the many millions of words or listen to the countless hours of radio I have put out into the universe. It may seem pessimistic to consider this salient truth, but, if anything, it motivates me to make as much as I can in the time I have, which I suppose is an egotistical and foolishly optimistic approach. But what else can one do? Bury one’s head in the sand, smoke endless bowls of pot, wolf down giant bags of Cheetos, and binge-watch insipid television that will also not be remembered?) You can either accept this reality, reach the few people you can, and find happiness and gratitude in doing so. Or you can deny the clear fact that your ego is getting in the way of your achievements, embracing supererogatory anxieties that force you to spend too much time feeling needlessly morose.

I suppose that in articulating this common neurosis, Hardy is performing a service. He seems to relish “mathematical fame,” which he calls “one of the soundest and steadiest of investments.” Yet fame is a piss-poor reason to go about making art or formulating theorems. Most contributions to human advancement are rendered invisible. They are often small yet subtly influential rivulets that pass, unnoticed, into the great river that future generations will wade in. We fight for virtue and rigor and intelligence and truth and justice and fairness and equality because this will be the legacy that our children and grandchildren will latch onto. And we often make waves without knowing it. Would we, for example, be enjoying Hamilton today if Lin-Manuel Miranda’s school bus driver had not drilled him with Geto Boys lyrics? And if we surrender those standards, if we gainsay the “trivial” inspirations that cause others to offer their greatness, then we say to the next generation, who are probably not going to be listening to us, that fat, drunk, and stupid is the absolute way to go through life, son.

A chair may be a collection of whirling electrons, or an idea in the mind of God: each of these accounts of it may have its merits, but neither conforms at all closely to the suggestions of common sense.

This is Hardy suggesting some church-and-state separation between pure and applied mathematics. He sees physics as fitting into some idealistic philosophy while identifying pure mathematics as “a rock on which all idealism founders.” But might not one fully inhabit common sense if the chair exists on some continuum beyond this either-or proposition? Is not the chair’s perceptible totality worth pursuing?

It is at this point in the book that Hardy’s argument really heads south and he makes an astonishingly wrongheaded claim, though one whose wrongness he could not have entirely foreseen: “Real mathematics has no effects on war.” This was only a few years before Los Alamos was to prove him wrong. And that’s not all:

It can be maintained that modern warfare is less horrible than the warfare of pre-scientific times; that bombs are probably more merciful than bayonets; that lachrymatory gas and mustard gas are perhaps the most humane weapons yet devised by military science; and that the orthodox view rests solely on loose-thinking sentimentalism.

Oh Hardy! Hiroshima, Nagasaki, Agent Orange, Nick Ut’s famous napalm girl photo from Vietnam, Saddam Hussein’s chemical gas massacre in Halabja, the use of Sarin-spreading rockets in Syria. Not merciful. Not humane. And nothing to be sentimental about!

Nevertheless, I was grateful to argue with this book on my second read, which occurred a little more than two weeks after the shocking 2016 presidential election. I had thought myself largely divested of hope and optimism, with the barrage of headlines and frightening appointments (and even Trump’s most recent Taiwan call) doing nothing to summon my natural spirits. But Hardy did force me to engage with his points. And his book, while possessing many flawed arguments, is nevertheless a fascinating insight into a man who gave up: a worthwhile and emotionally true Rorschach test you may wish to try if you need to remind yourself why you’re still doing what you’re doing.

Next Up: Tobias Wolff’s This Boy’s Life!

Correspondent: Going back to the idea of the general reader, or the common reader — whatever we want to call the audience here — the philosophical proposition involving the fly, the bat, and the worm expressing basic cognitive abilities, and how cognitive abilities come together so that humans are a higher form of animal than other animals, this was a very clear way of expressing this particular concept of individual senses. And I’m wondering if this was something that you concocted. Or that you took from Kant. Because I actually tried to find a philosophical precedent for this as well.